No matter how often I jump into a new technology, the number of new concepts, acronyms, and buzzwords always sends my brain into overdrive. What do these AI terms mean? Where is that concept used? It’s like getting lost, bouncing from one YouTube video to the next. I want to understand everything fully, but there are so many starting points.

I’ve been so focused on Artificial Intelligence (AI) this last year. I’ve experimented, read technical books, taken a postgraduate course, and done real-world implementations for our clients. During that time, I made notes of all the useful acronyms and concepts until they were drilled into my brain.

When I heard others on my team trying to learn and understand the terms (and sometimes using them incorrectly), I realized it would be cool to organize my notes to help others get a jump start. A sort of “cheat sheet,” if you will.

So, I built it out and sent it over to my content team, who immediately wanted me to alphabetize it for “easy reference.” But as you can see, it is NOT alphabetized. My cheat sheet was not meant to be an A to Z guide for people to look up a single term but a way for folks to understand and get caught up with AI.

While learning AI, I’ve found that each term builds on the ones before it, and that is exactly how this cheat sheet reads. So, please follow along from beginning to end, and by the time you get to the last term, give yourself an air high five and imagine I’m on the other end.

AI Terms You Need To Know

ML / Machine Learning

Unlike traditional programming that involves writing fixed sets of rules, machine learning is a subset of AI where computers learn from data to make predictions or decisions. It allows systems to improve performance over time as they are exposed to more data.

DL / Deep Learning

A further subset of machine learning. It uses neural networks with many layers to analyze various forms of data and extract complex patterns. It’s much more sophisticated than traditional ML but requires much more data and processing power.

ANN / Artificial Neural Network

A conceptual framework and model structure that mimics the human brain’s structure and function to improve decision-making and predictive accuracy. Specifically, it consists of interconnected layers of nodes (neurons) that process and transmit information. They are used to recognize patterns, make decisions, and predict outcomes by learning from data through a process called training.

Model

A type of mathematical model that, after being trained on a data set, can be used to make predictions or classifications on new data. A model can refer to a general model and its learning algorithm or a fully trained model with all its internal parameters tuned.

Inference

Using a trained model to make predictions or decisions against new, unseen data.

Training

A process where a learning algorithm adjusts a model’s internal parameters to minimize errors in its predictions.

Parameters

The internal variables of a trained model whose values are learned from the training data. Training the same initial model on different datasets will produce different parameter values. There are two types of parameters: weights and biases.

Hyperparameter

A value that is set before training begins to control the learning process. Unlike normal parameters, these values are not learned from the training data.
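To tie Training, Parameters, and Hyperparameter together, here is a minimal sketch that fits a tiny model, y = w * x + b, with plain gradient descent. The data, the function name `train`, and the chosen values for `learning_rate` and `epochs` are all made up for illustration. In this sketch `w` and `b` are the parameters (learned from the data), while `learning_rate` and `epochs` are hyperparameters (picked before training starts).

```python
def train(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # parameters start at arbitrary values
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for every training example
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Nudge the parameters in the direction that reduces the error
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy training data generated from y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = train(xs, ys)            # training: learn the parameters
prediction = w * 10.0 + b       # inference: apply them to unseen input
print(round(w, 2), round(b, 2), round(prediction, 2))
```

Real models have millions or billions of parameters instead of two, but the loop is the same idea: predict, measure the error, adjust.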

Architecture

Architecture defines the blueprint for how a model is built, prior to it being trained. It’s part of the design and structure of a neural network, including the number and types of layers, connections between neurons, and methods for processing data. Different architectures are better suited to different tasks.

Task

The specific problem a model is trying to solve. Some examples are classification, regression, NLP, vision, and speech recognition.

Generative AI

A class of AI models designed to create new content, such as text, images, music, or even videos, based on patterns learned from existing data. These models generate new data that is similar to the training data they were exposed to based on an input prompt. Generative AI is closely related to NLP, often overlapping with language generation tasks.

NLP / Natural Language Processing

A range of tasks that enable machines to understand, interpret, and generate human language. The most common examples are:

Text classification – Categorizing or assigning predefined labels to textual data based on its content.

Document summarization – Creating a concise and coherent summary of a longer text document.

Sentiment analysis – Identifying opinions or emotions in text as positive, negative, or neutral.

Machine translation – Converting text from one language to another.

Named entity recognition – Identifying and classifying named entities such as people, organizations, locations, and dates within a text.

LLM / Large Language Model

Models that are designed to perform NLP tasks by leveraging their large-scale architectures and vast amounts of training data. Examples are Google’s BERT and OpenAI’s GPT-4o.

Corpus

Refers to the large set of text used for training and evaluating a language model. The Wikipedia data set is an example corpus.

Prompt Engineering

Process of designing and refining the input prompt that gets passed to a language model. It involves crafting the prompts in such a way that the model can understand the task and generate the desired output effectively.

Tokens

When a prompt is provided to a model for an NLP task, this text is first broken down into smaller pieces called tokens in a process called tokenization. These tokens are words, parts of words, or even single characters. These tokens are what the model processes for the task.
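As a rough illustration of tokenization, the sketch below splits text into word and punctuation tokens and then maps each token to an integer ID. This is a toy: `toy_tokenize` and the tiny vocabulary are invented for this example, and production LLMs typically use learned subword tokenizers instead.

```python
import re


def toy_tokenize(text):
    """Split text into lowercase word and punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


tokens = toy_tokenize("Tokens are small pieces of text.")

# Models work with numbers, so each distinct token gets an integer ID.
vocab = {tok: i for i, tok in enumerate(sorted(set(tokens)))}
token_ids = [vocab[tok] for tok in tokens]

print(tokens)
print(token_ids)
```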

Context Window

The maximum number of tokens that a model can consider at one time when processing or generating text. This size influences the model’s ability to understand and utilize the surrounding context to make accurate predictions or generate responses. For a simple example, suppose a context window was only 100 tokens, and the input provided was 500 tokens. Without any special handling, the input would be cut off to fit, and the model would only consider 100 of those tokens (in the simplest case, the first 100).

Sliding Context Window

When the number of input tokens in a prompt is larger than the model’s available context window, a sliding context window can be used to process all input tokens, one chunk at a time. For a simple example, suppose a context window was only 100 tokens and the input provided was 500 tokens. Tokens 1 – 100 could be processed, followed by 101 – 200, followed by 201 – 300, etc. This is useful for NLP tasks with very large inputs, like document summarization or chats.
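The 500-token example can be sketched in a few lines. The helper names `truncate_to_window` and `sliding_windows`, and the optional `stride` argument (which lets neighbouring chunks overlap), are my own assumptions for illustration and not part of any particular library.

```python
def truncate_to_window(tokens, window_size):
    """Plain context window: keep the first `window_size` tokens only."""
    return tokens[:window_size]


def sliding_windows(tokens, window_size, stride=None):
    """Yield consecutive chunks of at most `window_size` tokens.

    `stride` is how far the window moves each step. A stride smaller
    than `window_size` makes neighbouring chunks overlap.
    """
    stride = stride or window_size
    for start in range(0, len(tokens), stride):
        yield tokens[start:start + window_size]
        if start + window_size >= len(tokens):
            break  # the last chunk already reached the end of the input


tokens = [f"tok{i}" for i in range(1, 501)]  # a 500-token input

visible = truncate_to_window(tokens, 100)
chunks = list(sliding_windows(tokens, 100))

print(len(visible), len(chunks))
print(chunks[1][0], chunks[1][-1])
```

With truncation, 400 tokens are never seen. With the sliding window, every token lands in some chunk, at the cost of running the model once per chunk.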

Pre-trained Model

A model that has already been trained on an extensive data set for a general task. Usually, when people talk about a pre-trained model, it’s in the context of re-using that pre-trained model for a new specific task.

Fine-Tuning

Taking a pre-trained model and running a much shorter round of training to adjust its parameters slightly on a new, smaller, task-specific dataset. Fine-tuning is much more cost-effective than building and training a model from scratch.

For example, suppose we had a pre-trained model trained on an extensive data set to provide sentiment analysis. We could take that model and fine-tune it on the IMDB database of reviews to create a model specifically tuned to perform sentiment analysis on movie reviews. In theory, this new model should be noticeably more accurate on movie reviews than the original.

Adapter

A technique used to fine-tune pre-trained models efficiently. This is done by adding small task-specific layers (adapters) between the layers of a pre-trained model, then only training these adapters and keeping the original model parameters unchanged.

Transformer

A specific deep learning model architecture, introduced in 2017, that has become the foundation for many state-of-the-art models in NLP, such as BERT, GPT, T5, and Mistral. Transformers make heavy use of the attention mechanism to capture word context much better than prior model architectures. Additionally, they are designed to be parallelizable at every stage to support the training of much larger models.

BERT / Bidirectional Encoder Representations from Transformers

Focuses on bidirectional context to achieve state-of-the-art performance on many NLP tasks. Imagine reading a sentence left to right and right to left simultaneously. This type of “reading” allows BERT to understand the full context of each word better than if it were only reading in one direction.

BERT is not designed to generate text but excels at extracting information from text. These extractions include classification, summarization, question answering, sentiment analysis, and named entity recognition. Google developed the original transformer, and its well-known versions are BERT Base and BERT Large. Other groups have built popular derivatives such as DistilBERT, BioBERT, and ClinicalBERT.

GPT / Generative Pre-trained Transformer

Uses a unidirectional transformer for generating coherent and contextually relevant text. Since it reads text from left to right, it excels at predicting each word based on the words that came before it.

It generates text by predicting the next word (more precisely, the next token) in a sequence, one at a time. GPT is designed to generate text and excels at story generation and conversational agents. OpenAI developed this transformer, and its more well-known GPT model versions are GPT-1, GPT-2, GPT-3, and GPT-4o. GPT-Neo and GPT-J are open-source models in the same style, developed by EleutherAI rather than OpenAI.

T5 / Text-To-Text Transfer Transformer

Treats all NLP tasks as text-to-text problems, allowing a single model to be fine-tuned on various tasks. This means that it takes text as input and produces text as output. Google developed this transformer, and its well-known T5 model versions are T5 Base, T5 Large, mT5, T5 1.1, and Flan-T5.

Mistral

A series of models that combine text understanding and generation for NLP tasks. Mistral AI developed these transformer-based models, and some well-known versions are Mistral 7B and Mistral 7B Instruct.

Attention

Mechanism in neural networks, particularly transformers, that assigns varying degrees of importance to different parts of the input sequence when processing each token and making predictions. This helps to prioritize relevant information and improve performance.
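To make "varying degrees of importance" concrete, here is a small sketch of scaled dot-product attention, the form of attention used in transformers, for a single query. The vectors are tiny made-up numbers and the function names are my own. In a real model the queries, keys, and values are produced by learned weights.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    # How well does the query match each key?
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is a weighted average of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# Three input tokens, each with a 2-number key and value (made-up data)
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]

weights, output = attend([2.0, 0.0], keys, values)
print([round(w, 3) for w in weights])
print([round(x, 3) for x in output])
```

The second token’s key does not match the query at all, so it receives the smallest weight and contributes least to the output.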

Zero-shot, One-shot, Few-shot Learning/Prompting

When we define it with regard to learning, this is when the model performs tasks having seen no (Zero-shot), one (One-shot), or few (Few-shot) specific examples during training. When we define it with regard to prompting, this is when the model is given no (Zero-shot), one (One-shot), or few (Few-shot) examples of how to perform the task/return the expected output.
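On the prompting side, the difference is simply how many worked examples appear in the prompt. The sketch below assembles zero-shot and few-shot prompts for a sentiment task. The `build_prompt` helper, the prompt layout, and the example reviews are all hypothetical, and the resulting string would still need to be sent to a language model of your choice.

```python
def build_prompt(task, examples, new_input):
    """Assemble a prompt with zero, one, or several worked examples."""
    lines = [task, ""]
    for text, label in examples:
        lines += [f"Review: {text}", f"Sentiment: {label}", ""]
    # The final entry is left open for the model to complete.
    lines += [f"Review: {new_input}", "Sentiment:"]
    return "\n".join(lines)


task = "Classify the sentiment of each review as positive or negative."
examples = [
    ("I loved every minute of it.", "positive"),
    ("A complete waste of time.", "negative"),
]

zero_shot = build_prompt(task, [], "The plot was dull.")
one_shot = build_prompt(task, examples[:1], "The plot was dull.")
few_shot = build_prompt(task, examples, "The plot was dull.")

print(few_shot)
```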

Multi-Modal

Models designed to handle and integrate multiple types of data (modalities), such as text, images, audio, and video. For example, a multi-modal model might take in text and output an image, or take in speech and produce text.

RAG / Retrieval Augmented Generation

A model architecture developed by Facebook AI (now Meta AI) that combines retrieval-based methods with generative models to improve the quality and accuracy of generated text.

When an input prompt is processed, passages or relevant documents that likely contain the information needed to answer the query are first retrieved. Then, the query and retrieved documents are passed to the generative model together, so the response can incorporate the relevant information from the retrieved documents. RAG can leverage large external databases, allowing models to access vast information and generate responses from data they were not originally trained on.
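Here is a deliberately tiny sketch of the retrieve-then-generate flow. It ranks documents by simple word overlap with the query, which is an assumption made to keep the example self-contained (real systems usually compare embeddings in a vector database). The documents, helper names, and prompt wording are invented, and the final call to a generative model is left out.

```python
import re


def words(text):
    """Lowercase word set used for a crude relevance score."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query, documents, top_n=1):
    """Return the `top_n` documents sharing the most words with the query."""
    query_words = words(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & words(doc)),
        reverse=True,
    )
    return ranked[:top_n]


def build_rag_prompt(query, documents):
    """Combine the retrieved passages and the question into one prompt."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents))
    return (
        f"Answer using only this context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


documents = [
    "The support desk is open Monday to Friday from 9am to 5pm.",
    "Refunds are processed within 10 business days.",
    "Our office is located in the city centre.",
]

query = "When is the support desk open?"
best = retrieve(query, documents)
prompt = build_rag_prompt(query, documents)  # this is what the model would see
print(prompt)
```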

Reinforcement Learning

A type of machine learning where an agent learns to make decisions by performing actions and receiving feedback in the form of penalties or rewards. It uses this feedback to learn and improve over time. In this way, it’s like fine-tuning a model to better its performance. However, it’s computationally more expensive and is employed for sequential decision-making tasks (like games or robotics).

AI Agent

Software that autonomously interacts with its environment, makes decisions, and takes actions to achieve its goals. It uses AI models to understand the input data (textual prompt, image, spoken language, etc.) and to make decisions (predict outcomes, evaluate possible actions, and select the best course of action). The AI agent software then carries out the course of action.

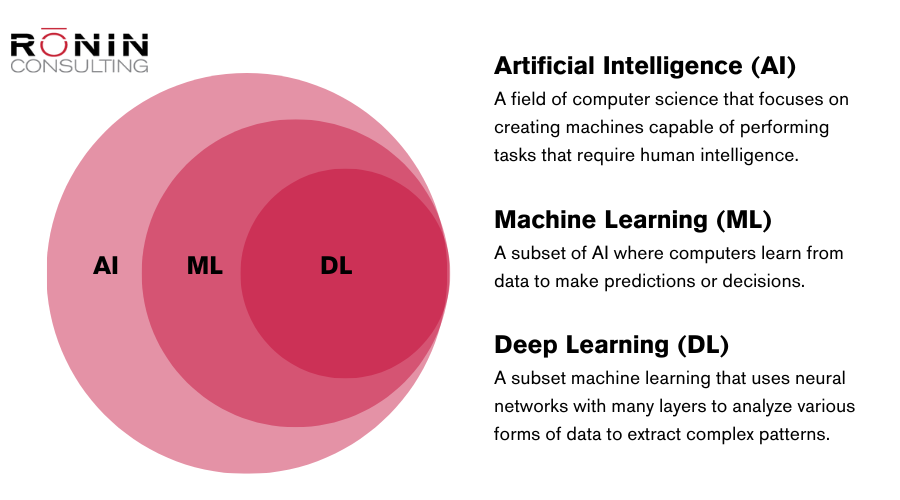

A Diagram of AI Terms

Along with the AI terms cheat sheet, I created a few diagrams during my postgraduate course on AI, ML, and DL. I found these very helpful in guiding me through the concepts when I started learning about AI.

In the diagram above, the first set of circles shows how Deep Learning is a subset of Machine Learning, and they are both subsets of Artificial Intelligence.

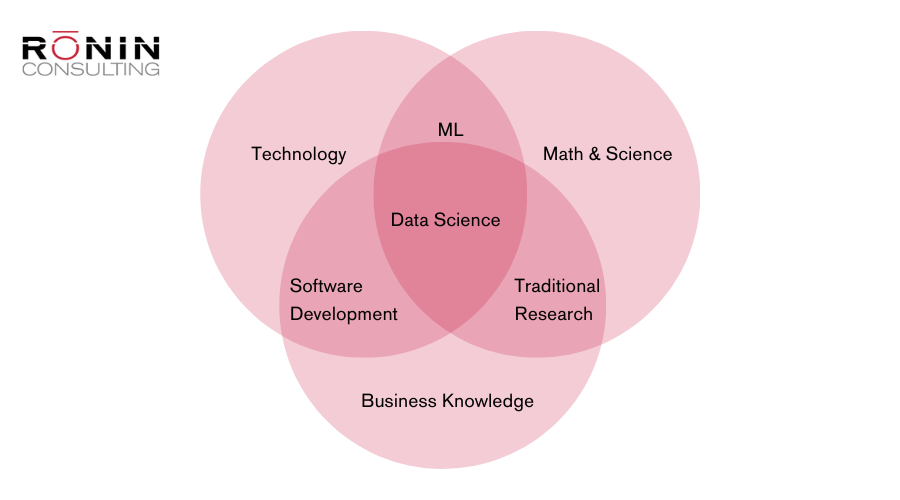

Early on, I was unclear how Computer Science and Math correlated exactly with Data Science, so I created this Venn diagram of the various fields of study that comprise Data Science.

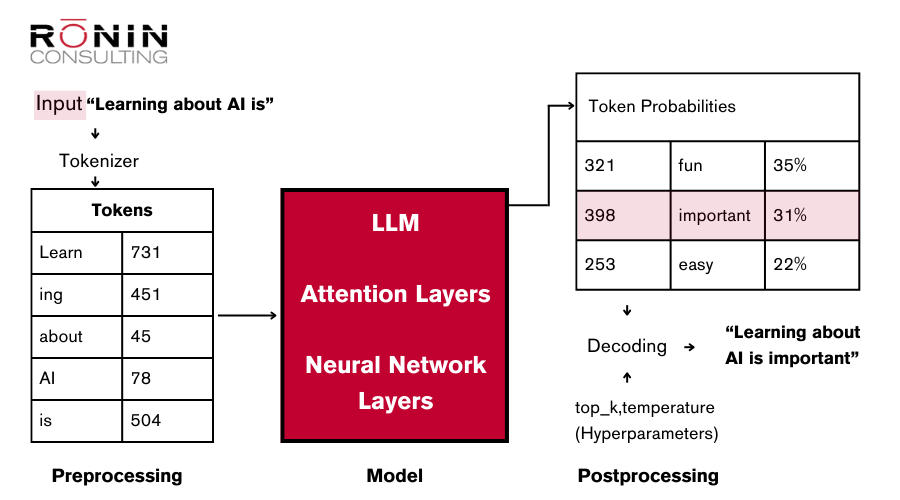

Inference Pipeline

The above diagram is admittedly simplified but demonstrates the relationships between some of the model architecture topics. The inference pipeline shows where input text is converted to tokens, the next token probabilities are predicted, and those probabilities are finally decoded into the next predicted word. Creativity vs. stability can be controlled with top_k and temperature (two example settings that are chosen at inference time and are often loosely called hyperparameters, even though they are not part of training).
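The decoding step can be sketched as follows. The raw scores (logits) and candidate words are invented, and `next_token_probs` and `sample_next_token` are hypothetical helper names. The sketch assumes `temperature` is greater than zero.

```python
import math
import random


def next_token_probs(logits, temperature=1.0, top_k=None):
    """Convert raw scores (logits) into sampling probabilities."""
    # Rank candidates from most to least likely.
    items = sorted(logits.items(), key=lambda kv: kv[1], reverse=True)
    if top_k is not None:
        items = items[:top_k]  # top_k: discard everything but the k best
    # Temperature below 1 sharpens the distribution, above 1 flattens it.
    scaled = [score / temperature for _, score in items]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return {tok: e / total for (tok, _), e in zip(items, exps)}


def sample_next_token(logits, temperature=1.0, top_k=None, rng=random):
    """Pick the next token at random according to its probability."""
    probs = next_token_probs(logits, temperature, top_k)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens])[0]


# Made-up scores for the word after "The cat sat on the"
logits = {"mat": 3.0, "sofa": 2.0, "roof": 1.0, "moon": -1.0}

focused = next_token_probs(logits, temperature=0.5, top_k=2)
creative = next_token_probs(logits, temperature=1.5)

print({tok: round(p, 3) for tok, p in focused.items()})
print({tok: round(p, 3) for tok, p in creative.items()})
print(sample_next_token(logits, temperature=1.5))
```

A low temperature with a small top_k keeps the output stable and predictable, while a higher temperature gives unlikely words such as "moon" a real chance of being picked.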

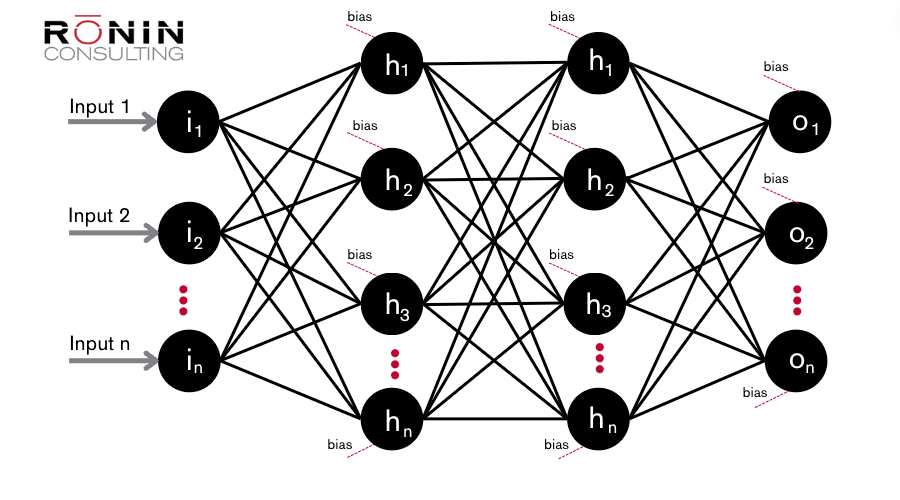

Neural Network Example

Although every Neural Network is different, this diagram represents a few key components. Here we can see the inputs (e.g. token values, pixel values, category values, etc.) being passed to the input layer. Every circle represents a neuron. Neurons pass their output value to the next layer. In this example we have the input layer, the output layer, and two hidden layers (layers between input and output).

It is important to note the parameters here. Parameters are divided into two categories: weights and biases. The values for these are learned during training of the model. Every black line really represents two things: the output of the neuron and a learned weight (the strength of the connection). Only the weight is a parameter, since the neuron’s output is recalculated for every new input. The red lines represent a bias (an overall adjustment of that neuron). So every black line contributes 1 parameter (its weight), and every red line contributes 1 parameter (its bias).

As you can imagine, the more neurons and layers you have, the more parameters the model will have. As the number of trainable parameters goes up, more complex relationships can be captured.
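To see how quickly the count grows, here is a short sketch that counts the parameters of a fully connected network, using one weight per connection and one bias per neuron outside the input layer. The layer sizes are hypothetical and are not taken from the diagram.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    `layer_sizes` lists the number of neurons per layer, input first.
    """
    # Every neuron connects to every neuron in the next layer.
    weights = sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))
    # Every neuron after the input layer has one bias.
    biases = sum(layer_sizes[1:])
    return weights, biases


# Input layer of 3, two hidden layers of 4, output layer of 2
small_weights, small_biases = count_parameters([3, 4, 4, 2])
print(small_weights, small_biases, small_weights + small_biases)

# Doubling the hidden layers far more than doubles the weights
large_weights, large_biases = count_parameters([3, 8, 8, 2])
print(large_weights, large_biases, large_weights + large_biases)
```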

Don't Be Sad - There Will Be More AI Terms!

Just as new trends in technology and AI are constantly evolving, so will this document. It is a living document, and I will update it with new terms as they emerge. If you feel I’ve missed something or if you want to collaborate with our team on your next AI project, please don’t hesitate to contact us.

I truly hope you find this helpful!

Ryan Kettrey